By Craxel Founder and CEO David Enga

August 17, 2025

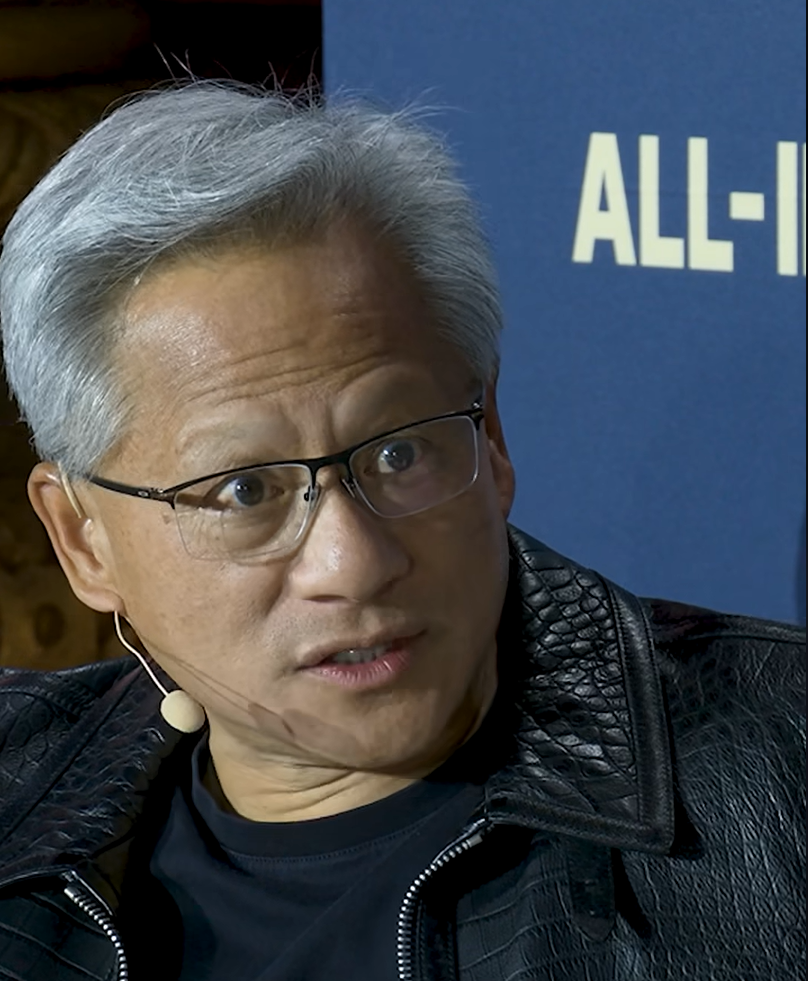

Big fan of the All-In Podcast, but there is one really bad assumption being made around AI.

The assumption is that because LLMs require massive amounts of compute today, they will continue to do so. There is NO provably hard computer science problem underneath attention, nor one that requires layers upon layers of deep neural networks to predict tokens. In fact, the brain uses a very tiny amount of energy to do what it does, so why assume that predicting tokens should be computationally expensive?

This means there is a substantial risk that an algorithm exists that is exponentially more computationally efficient than today's LLMs. If it is discovered, what happens to all the investment in GPUs, data centers, and electricity production? Likely, much of it will be used for higher-value purposes than predicting tokens, but in the meantime, there could be a financial shock. Remember the short-term upheaval from DeepSeek's very mild improvement?

What does this mean for the AI arms race? It means that it might not be a data center construction / electricity production / chips race. It may come down to algorithmic innovation. Now, how in the world would such an algorithm be protected? If patented or open sourced, it'll be out there for the world to see. If kept as a trade secret, adversarial countries will be coming after it. What company will be able to defend itself? Most likely, everyone's going to end up with the "math".

As the inventor of multiple algorithms that people thought weren't possible, I think this is more likely than not to happen. How does the U.S. win an AI arms race if all that "winning" requires is an algorithm? The U.S. government should really do some strategic analysis around this possibility. Maybe the next "Manhattan Project" should be finding the algorithm.

https://lnkd.in/ezx_mStX